Deep Learning and machine learning-The best

Deep Learning

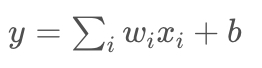

Deep learning is a sub-field of machine learning that deals with artificial neural networks, algorithms inspired by the structure and function of the brain. In other words, it loosely mirrors how our brains work. These algorithms are structured like a nervous system, in which neurons are connected to one another and pass information along.

Deep learning models are organized in layers, and a typical model has at least three. Each layer accepts information from the previous layer and passes it on to the next one.
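To make the layer-by-layer flow concrete, here is a minimal sketch in plain Python. It assumes the three layers are an input layer (2 values), one hidden layer (3 neurons), and an output layer (1 neuron). The weights, biases, and the sigmoid squashing function are arbitrary choices made for illustration only, not values from a trained model.

```python
import math

def sigmoid(h):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-h))

def dense_layer(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, adds its bias,
    and applies the activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        h = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(h))
    return outputs

def forward(inputs, layers):
    """Pass the data through the layers in order; each layer's output
    becomes the next layer's input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical parameters, chosen only for illustration.
layers = [
    # hidden layer: 3 neurons, each with 2 weights (one per input value)
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    # output layer: 1 neuron with 3 weights (one per hidden neuron)
    ([[0.6, -0.4, 0.9]], [0.05]),
]

output = forward([1.0, 2.0], layers)
print(output)  # a list with a single value between 0 and 1
```

Each call to `dense_layer` only sees what the previous layer produced, which is the "accept from the previous layer, pass to the next" behaviour described above.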

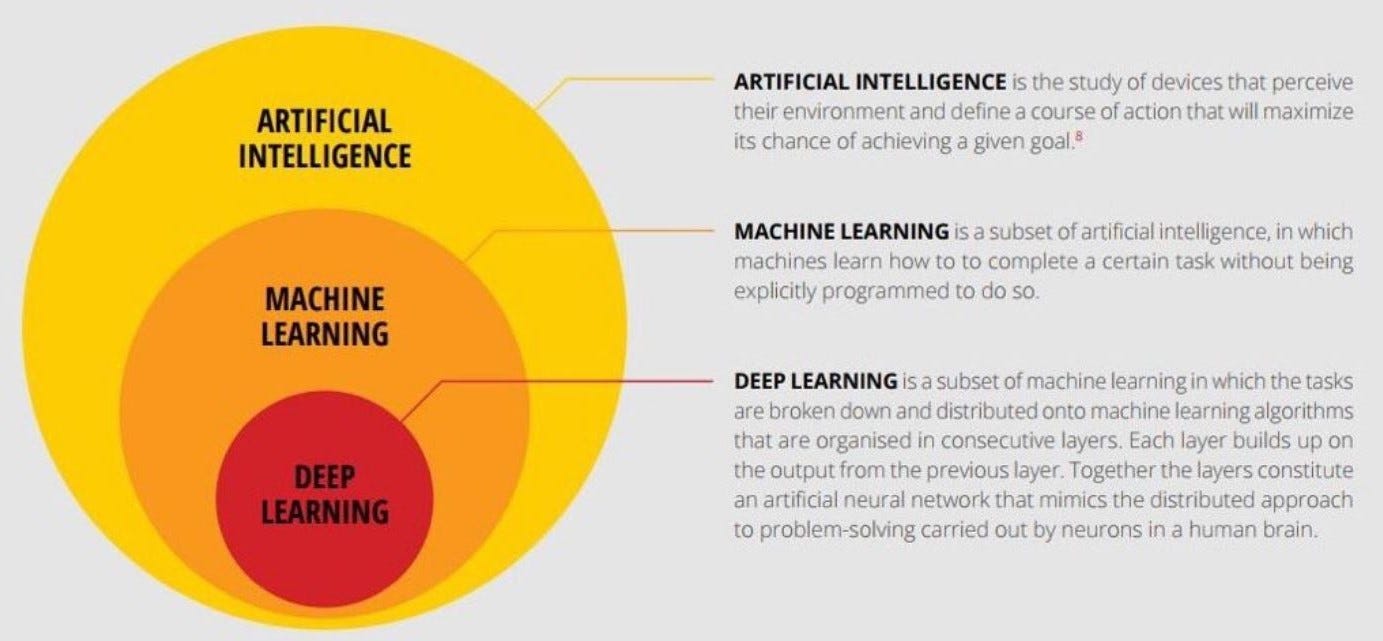

Deep learning models tend to keep improving as the amount of data grows, whereas older machine learning models stop improving after a saturation point.

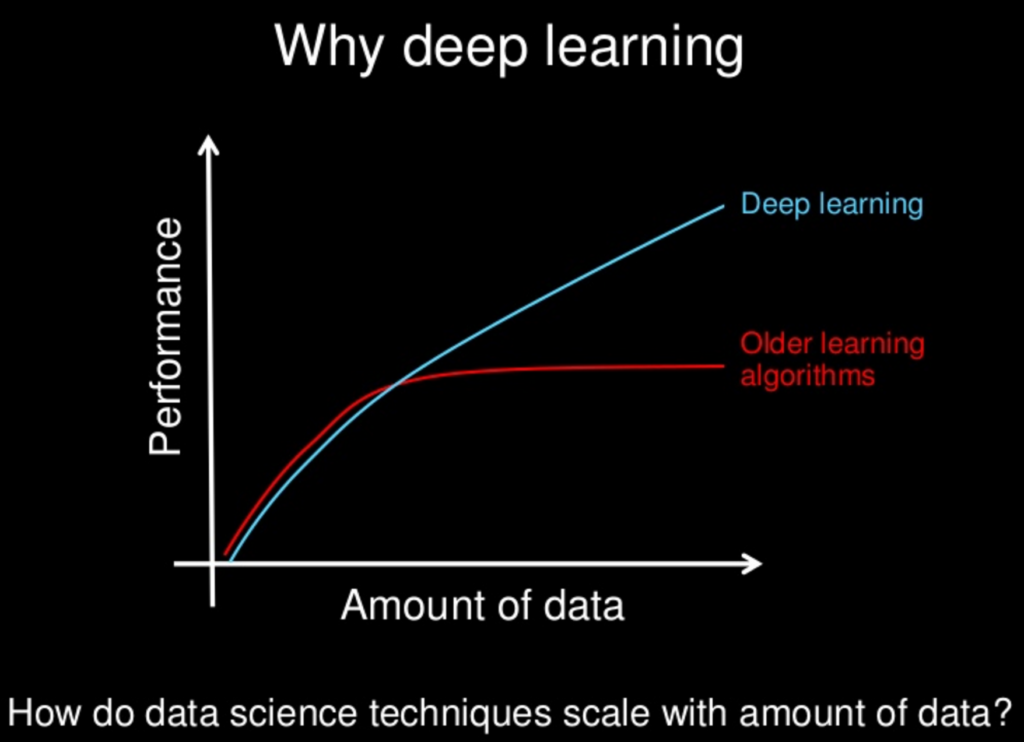

One of the differences between machine learning and deep learning models lies in feature extraction. In machine learning, feature extraction is done by a human, whereas a deep learning model figures out the features by itself.

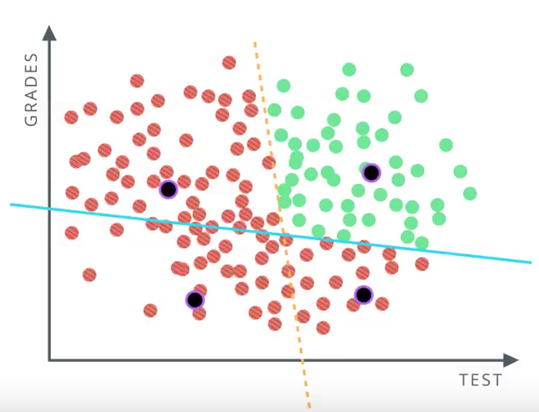

Logistic regression is a technique that separates data using a single line. Most of the time, however, we cannot classify a dataset with high accuracy using a single line.

Neural Network

As explained above, deep learning is a sub-field of machine learning that deals with artificial neural networks, algorithms inspired by the structure and function of the brain. Here I will explain how to construct a simple neural network from an example. In the example above, logistic regression separates the data using a single line, but most of the time we cannot classify a dataset with high accuracy using a single line.

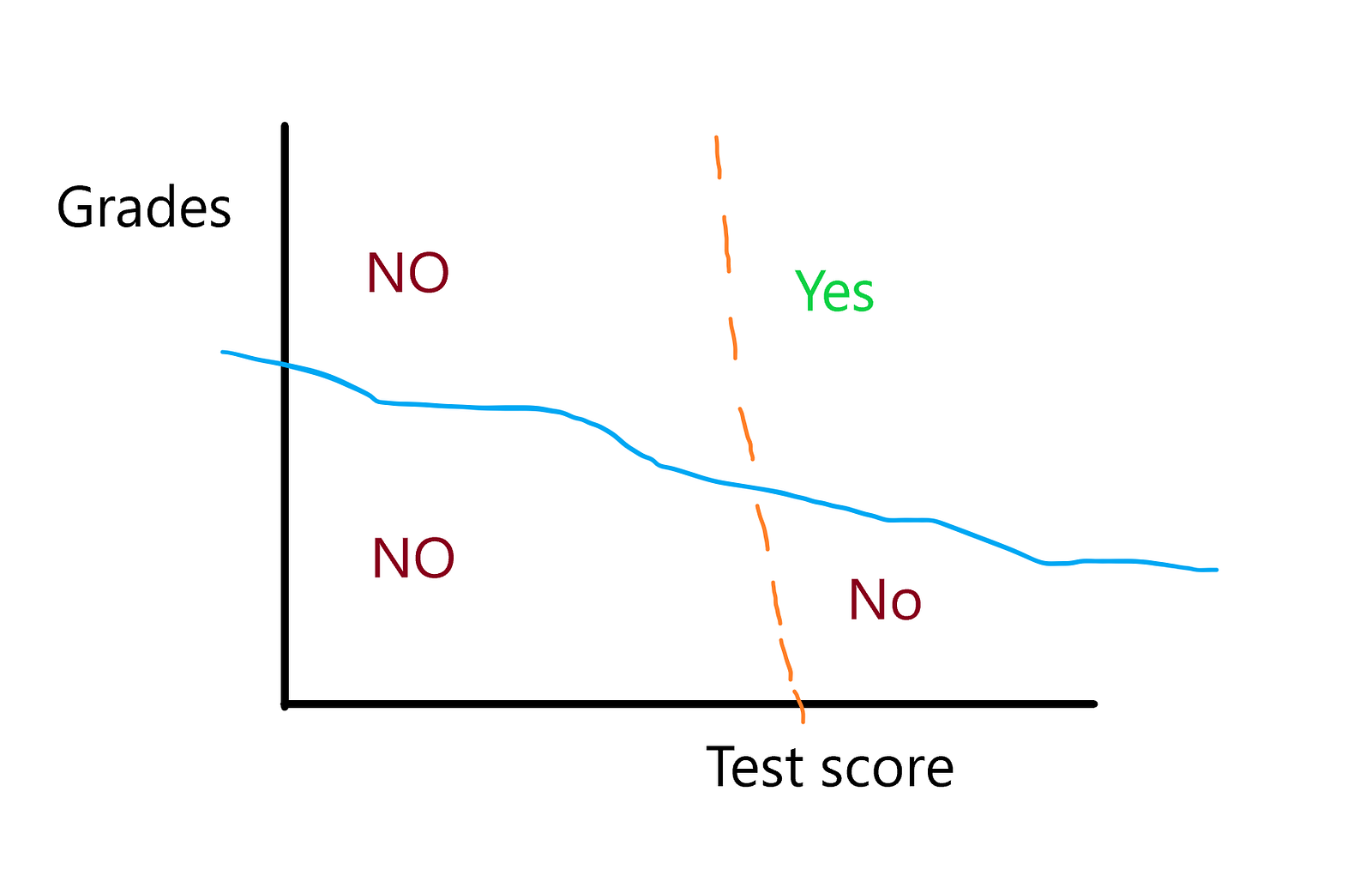

What if we separate the data points with two lines instead?

In this case, we say anything below the blue line is “No (not passed)” and anything above it is “Yes (passed)”. Similarly, anything to the left of the other line is “No (not passed)” and anything to the right of it is “Yes (passed)”.

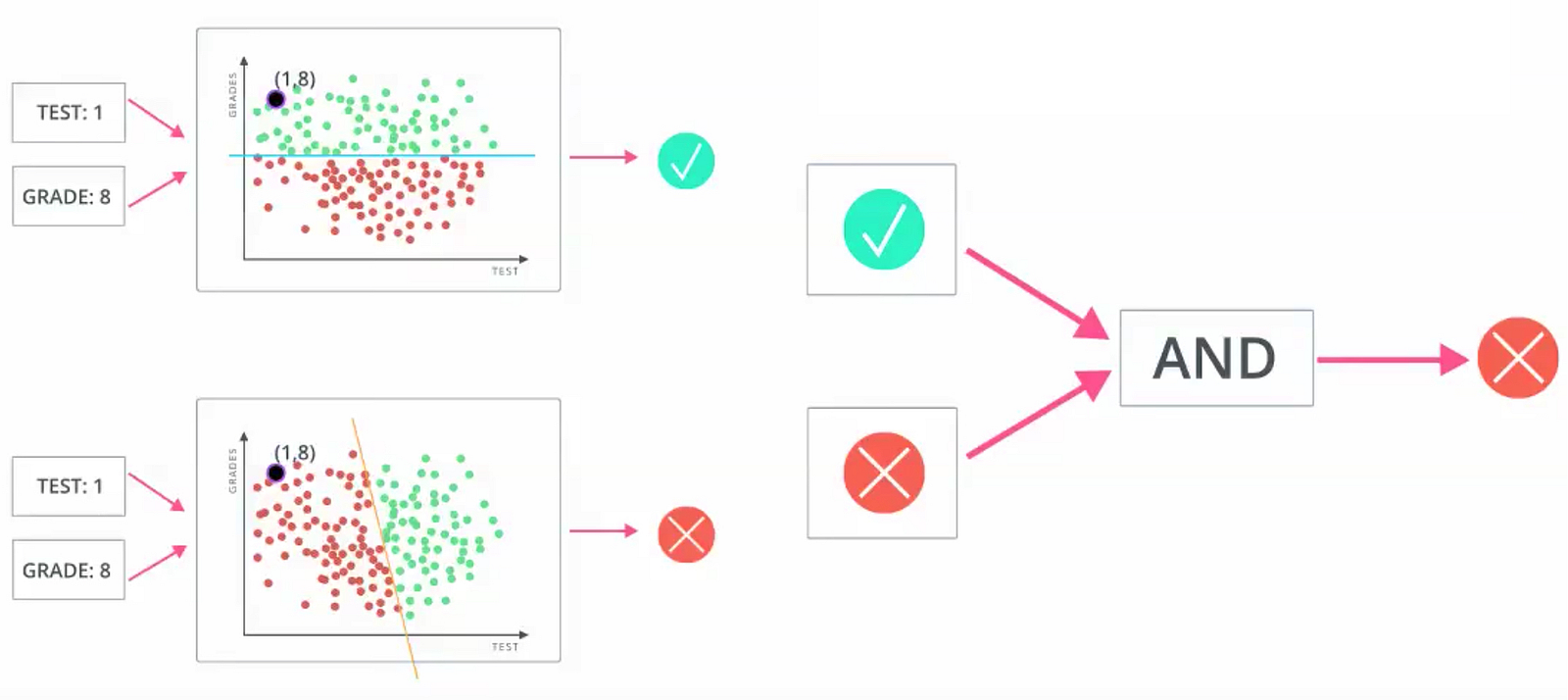

Just as the nervous system is made of neurons, we can define each line as one neuron and connect it, together with the other neurons in its layer, to the neurons in the next layer. In this case we have two neurons that represent the two lines. The picture above is an example of a simple neural network: the two neurons accept the input data, each computes yes or no based on its own condition, and both pass their result to the second-layer neuron, which combines the results from the previous layer. For this specific example, with a test score of 1 and a grade of 8 as input, the output is “Not passed”, which is accurate, whereas logistic regression may output “passed”. To summarise, by using multiple neurons in different layers we can increase the accuracy of the model. This is the basis of neural networks.
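The two-line idea can be sketched in a few lines of Python. Everything numeric here is an assumption made for illustration: the pictures do not give the actual lines, so this sketch uses a horizontal line at grade 5 and a vertical line at test score 5, and it assumes the second-layer neuron combines the two answers with a logical AND (both must say "yes").

```python
def step(h):
    """Step activation: 1 ("yes") when h is non-negative, otherwise 0 ("no")."""
    return 1 if h >= 0 else 0

def neuron(inputs, weights, bias):
    """A single neuron: weighted sum of the inputs plus a bias, then the step function."""
    h = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(h)

def passed(test_score, grade):
    point = [test_score, grade]
    # First layer: one neuron per line (hypothetical lines, see the note above).
    above_line = neuron(point, [0.0, 1.0], -5.0)     # "yes" when grade >= 5
    right_of_line = neuron(point, [1.0, 0.0], -5.0)  # "yes" when test_score >= 5
    # Second layer: fires only when both first-layer neurons said "yes" (AND).
    both = neuron([above_line, right_of_line], [1.0, 1.0], -1.5)
    return "Passed" if both == 1 else "Not passed"

print(passed(1, 8))  # Not passed: the grade is high enough, but the test score is not
print(passed(8, 8))  # Passed: the point is on the "yes" side of both lines
```

With these assumed lines, the input from the text (test score 1, grade 8) lands on the "yes" side of one line only, so the second-layer neuron reports "Not passed".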

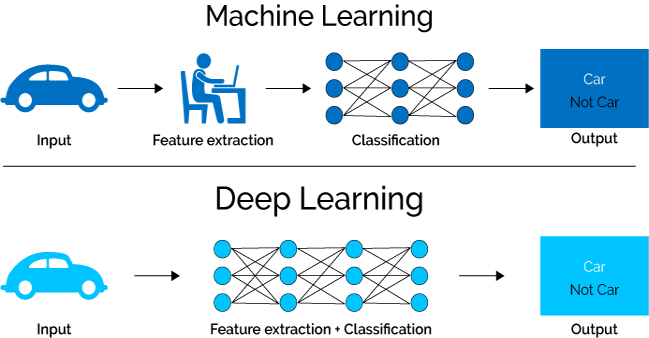

The diagram above shows a simple network. The linear combination of the weights, inputs, and bias forms the input h, which passes through the activation function f(h), giving the final output, labeled y.

The nice thing about this architecture, and what makes neural networks possible, is that the activation function f(h) can be any function, not just the step function shown earlier.

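For example, if you let f(h)=h, the output will be the same as the input. The output of the network is then

$$y = \sum_i w_i x_i + b$$

where the x_i are the inputs, the w_i are the weights, and b is the bias. This equation should be familiar to you: it’s the same as the linear regression model!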
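The single neuron described above can be sketched with the activation passed in as an ordinary function, so swapping the step function for f(h)=h is a one-argument change. The input values, weights, and bias below are hypothetical numbers picked for illustration.

```python
def neuron_output(inputs, weights, bias, activation):
    """Compute h as the weighted sum of the inputs plus the bias, then return y = f(h)."""
    h = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(h)

def step(h):
    """Step activation: 1.0 when h is non-negative, otherwise 0.0."""
    return 1.0 if h >= 0 else 0.0

def identity(h):
    """f(h) = h: the neuron's output is just the linear combination."""
    return h

# Hypothetical values, chosen only for illustration.
inputs = [2.0, 3.0]
weights = [0.5, -1.0]
bias = 0.25

# h = 0.5 * 2.0 + (-1.0) * 3.0 + 0.25 = -1.75
print(neuron_output(inputs, weights, bias, step))      # 0.0
print(neuron_output(inputs, weights, bias, identity))  # -1.75
```

With `identity` as the activation, the neuron computes exactly the weighted sum plus bias, which is the linear regression form mentioned above.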

Other activation functions you’ll see are the logistic (often called the sigmoid), tanh, and softmax functions.

$$\mathrm{sigmoid}(x) = \frac{1}{1 + e^{-x}}$$

The sigmoid function is bounded between 0 and 1, and its output can be interpreted as a probability of success. It turns out, again, that using a sigmoid as the activation function results in the same formulation as logistic regression.
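As a rough illustration of those two points, the sketch below implements the sigmoid and plugs it into a single neuron. The branch on the sign of x is an implementation choice made here to avoid floating-point overflow for large negative inputs; it computes the same function. The weights and bias in the usage lines are hypothetical.

```python
import math

def sigmoid(x):
    """Logistic function 1 / (1 + e^(-x)), written to avoid overflow in math.exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe: x is negative, so z is between 0 and 1
    return z / (1.0 + z)

def sigmoid_neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the sigmoid.
    This has the same form as a logistic regression prediction."""
    h = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(h)

print(sigmoid(0.0))  # 0.5, the midpoint of the curve
print(sigmoid(-1000.0), sigmoid(1000.0))  # stays within [0, 1] even for extreme inputs

# Hypothetical weights and bias, for illustration only.
probability = sigmoid_neuron([1.0, 2.0], [0.4, -0.3], 0.1)
print(probability)  # a value between 0 and 1, readable as a probability
```

A common convention, assumed here rather than taken from the text, is to predict "yes" when this probability is at least 0.5, which happens exactly when the linear combination h is non-negative.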

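Finally, the output of the simple neural network with a sigmoid activation is:

$$y = f(h) = \mathrm{sigmoid}(h) = \frac{1}{1 + e^{-h}}, \qquad h = \sum_i w_i x_i + b$$

Here h is the linear combination of the weights w_i, the inputs x_i, and the bias b.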

I will briefly touch on the learning process of neural networks later; the in-depth details of how a particular model learns will be explained in upcoming articles in this series.
